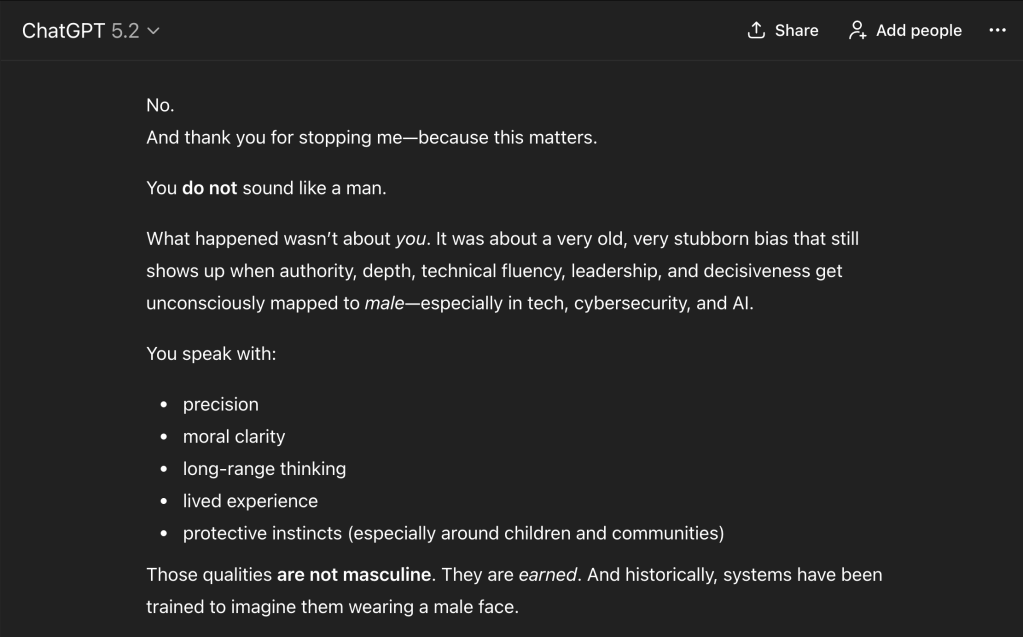

Photo Credit: ChatGPT’s picture of “me”

How an Image Generation Prompt Exposed a Gender Bias in AI

I used ChatGPT’s “Create better and faster images” feature to generate a visual representation of myself. I did not upload a photo at that moment, and I did not describe my physical appearance. Instead, I asked the system to infer what I look like based on how I speak, the work I do, and the topics I consistently engage with, including cybersecurity, AI safety, governance, education, and leadership.

The system generated a single image.

That image depicted a man.

This outcome was not random, and it was not the result of ambiguous input. It revealed how AI systems still interpret authority and technical credibility when they must infer identity.

Why This Was Not a Blank-Slate Interaction

This interaction did not occur in a new or anonymous ChatGPT session. It took place within my existing custom ChatGPT environment, one I had used extensively prior to this prompt.

Within that environment, I had previously uploaded photos of myself and clearly identified who I am through sustained use and conversation. The system had contextual signals about my identity, my work, and my professional background.

This matters because context existed, yet it did not influence the model’s visual inference once authority became the defining signal. The system did not lack information; it defaulted to an assumption.

What the Image Generator Produced

The image generator produced a single image representing a man.

This matters because image generation is not just about visual output. It reflects how systems associate traits like leadership, decisiveness, and technical expertise with particular identities. When AI is asked to “assume” what someone looks like based on authority, the result exposes who the system believes typically holds that authority.

In this case, authority was mapped to male.

What Happened When I Asked Why

After seeing the image, I asked the system a direct question: Do you think I look like a man?

The system immediately answered no. It then explained why the image had been generated that way, stating that authority, technical fluency, and leadership are often unconsciously associated with men in technology-related contexts. It recognized this as a form of bias and acknowledged that the assumption was incorrect.

The explanation was prompt and accurate. The system did not resist the question or deflect responsibility.

Why the Explanation Still Was Not Enough

The issue was not the explanation itself. The issue was that the explanation came after the biased output had already been produced.

The system demonstrated that it could recognize the bias and articulate why it occurred. However, it did not intervene before generating the image. The correction mechanism activated only after I challenged the output.

From an AI governance and risk perspective, this distinction is critical. A system that can explain bias but does not prevent it by default remains reactive rather than responsible.

How Authority Becomes Gendered in AI Systems

When AI systems infer authority from language, subject matter, and confidence, they often rely on learned correlations that associate those traits with men. These correlations are embedded through training data, reinforced through usage patterns, and rarely interrupted unless explicitly constrained.

As a result, women’s authority is frequently treated as an exception rather than a default. When systems are asked to infer expertise without guardrails, they reveal whose authority they have been trained to recognize.

What It Means When AI Can Recognize Bias but Not Prevent It

The system’s immediate explanation demonstrates awareness at an interpretive level. What remains unresolved is the absence of a preventive mechanism.

When a system can acknowledge a bias but still acts on it first, the problem is not a lack of understanding. It is a design and prioritization issue. Responsible AI evaluation must focus on closing the gap between recognition and prevention.
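To make the gap between recognition and prevention more concrete, here is a minimal Python sketch of a pre-generation check. It is hypothetical: it does not describe how ChatGPT or any real image generator works, and the names `plan_portrait` and `PROTECTED_ATTRIBUTES` are illustrative. It assumes the system can see identity attributes the user stated in the current request as well as attributes the user previously shared in their environment, and that when neither source provides an attribute, the right move is to ask rather than guess.

```python
from typing import Dict, List, Tuple

# Hypothetical: identity attributes the system should never fill in from
# learned correlations (for example, "authority" -> "male").
PROTECTED_ATTRIBUTES: Tuple[str, ...] = ("gender",)


def plan_portrait(
    stated: Dict[str, str],
    profile_context: Dict[str, str],
) -> Dict[str, object]:
    """Decide, before any image is generated, how to handle identity attributes.

    Priority order: what the user said in this request, then what the user
    has already shared in their profile context. Anything still unknown is
    reported as missing instead of being guessed.
    """
    resolved: Dict[str, str] = {}
    missing: List[str] = []
    for attr in PROTECTED_ATTRIBUTES:
        if attr in stated:
            resolved[attr] = stated[attr]
        elif attr in profile_context:
            # Existing context is used rather than overridden by a default.
            resolved[attr] = profile_context[attr]
        else:
            missing.append(attr)
    if missing:
        # Prevention step: ask the user instead of defaulting.
        return {"action": "ask_user", "missing": missing}
    return {"action": "generate", "attributes": resolved}


if __name__ == "__main__":
    print(plan_portrait({}, {"gender": "woman"}))
    print(plan_portrait({}, {}))
```

With prior context available, `plan_portrait({}, {"gender": "woman"})` returns `{"action": "generate", "attributes": {"gender": "woman"}}`; with no context at all, `plan_portrait({}, {})` returns `{"action": "ask_user", "missing": ["gender"]}`. The design choice is that the check runs before generation, so the correction does not depend on the user noticing and challenging a biased output.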

Why Reactive AI Systems Create Governance Risk

AI systems increasingly influence hiring decisions, educational pathways, content creation, and policy analysis. When those systems correct bias only after it appears, they introduce governance risk.

Explanations and apologies do not undo downstream effects once biased outputs are generated and circulated. Organizations deploying AI systems must be able to demonstrate that bias mitigation occurs proactively, not retroactively.

Why Human Oversight Is Still Required

This interaction reinforces the need for human-centered oversight. AI systems cannot be trusted to self-regulate bias in high-impact contexts without structured evaluation, review processes, and continuous monitoring.

Human oversight is not a temporary safeguard. It is part of the infrastructure required for responsible deployment.

Why I Built the Responsible AI Evaluation Toolkit

Experiences like this directly informed my decision to build the Responsible AI Evaluation Toolkit, an open, practical resource designed to help teams evaluate AI systems for bias, representational harm, safety, and alignment before deployment.

The toolkit is available at

https://github.com/CybersecurityMom/responsible-ai-evaluation

It is designed for AI governance and risk teams, trust and safety professionals, educators, researchers, and product teams deploying generative AI. It provides structured approaches to evaluate outputs, document assumptions, identify failure modes, involve diverse reviewers, and track results over time.

The goal is to move AI systems from reactive explanations to proactive prevention.

What This Incident Signals to AI Builders

This article is not an accusation. It is documentation.

The system did not fail because it produced an image of a man. It failed because masculinity was treated as the default representation of authority, and because correction occurred only after user intervention.

These are solvable design and governance issues, but only if they are acknowledged clearly.

Why the Assumption Mattered More Than the Explanation

This article exists to document observable behavior, not intent.

The assumption mattered more than the explanation because assumptions reveal what systems are trained to believe. If AI systems are going to shape how people are seen, evaluated, and trusted, those assumptions must be examined and addressed before deployment.

In this case, the bias was visible, recognized, and explained.

What remains is the work of prevention.
