“We make the complex human.”

That’s been the heart of AQ’s Corner since the very beginning, and this new chapter is no exception.

The Spark of Curiosity

Curiosity has a way of refusing to sit still, and this year, it led me straight into the evolving world of AI ethics and responsible design.

That journey began when I was selected for a research cohort on AI UX and career technology, exploring how job platforms use artificial intelligence to shape opportunity.

I was invited to join after sharing an in-depth analysis of an AI-powered career tool, feedback that opened new conversations about bias, usability, and trust in automated systems.

The cohort, which runs from September through December 2025, focuses on how people experience AI during their job search: not just what these systems do, but how they make people feel.

And somewhere along the way, I realized something powerful: understanding AI begins with asking better questions.

The Questions That Changed Everything

As a cybersecurity analyst, my focus has always been on protecting people: their data, their privacy, and their digital footprints. But as I began to examine how AI influences hiring and opportunity, I found myself asking:

- Who checks whether an AI system treats people fairly?

- How do we explain a machine’s reasoning when it ranks one résumé higher than another?

- How do we make trust and transparency understandable to everyday users, not just developers?

Those questions became the foundation for a new kind of creation.

⚙️ Introducing: AQ’s Corner Responsible AI Evaluation Toolkit

This toolkit was born out of research, reflection, and a deep desire to make AI accountability practical.

It’s an open-source framework designed to help anyone (educators, researchers, small businesses, and technologists) evaluate AI systems for:

✨ Fairness

🔒 Privacy & Security

🪞 Transparency & Explainability

📊 Accountability

It aligns with the NIST Privacy Framework, CISA’s Secure by Design guidance, and the FATE principles, covering fairness, accountability, transparency, and privacy, but it’s written in plain English.

No jargon. No code. Just structure, understanding, and care.

💬 “You don’t need to be a developer to do ethical work.”

How It Started

During my AI UX cohort, we met regularly as a group to discuss user experience findings, tool behavior, and real-world feedback from job seekers using AI systems. Those sessions sparked conversations about how design decisions, even small ones, can affect fairness, clarity, and trust. Outside of those meetings, I began diving deeper on my own, testing a variety of AI tools: résumé screeners, job matchers, recommendation systems, and chatbots.

Each time, I documented what I found:

- Did it explain its reasoning?

- Could users correct inaccurate results?

- Were privacy and consent disclosures clear and visible?

After dozens of notes, peer discussions, and late-night realizations, I saw the gap clearly: We didn’t need more AI systems; we needed better ways to evaluate them.

That realization became the heartbeat of the Responsible AI Evaluation Toolkit, a hands-on framework for anyone who wants to explore AI with clarity, empathy, and ethics in mind.

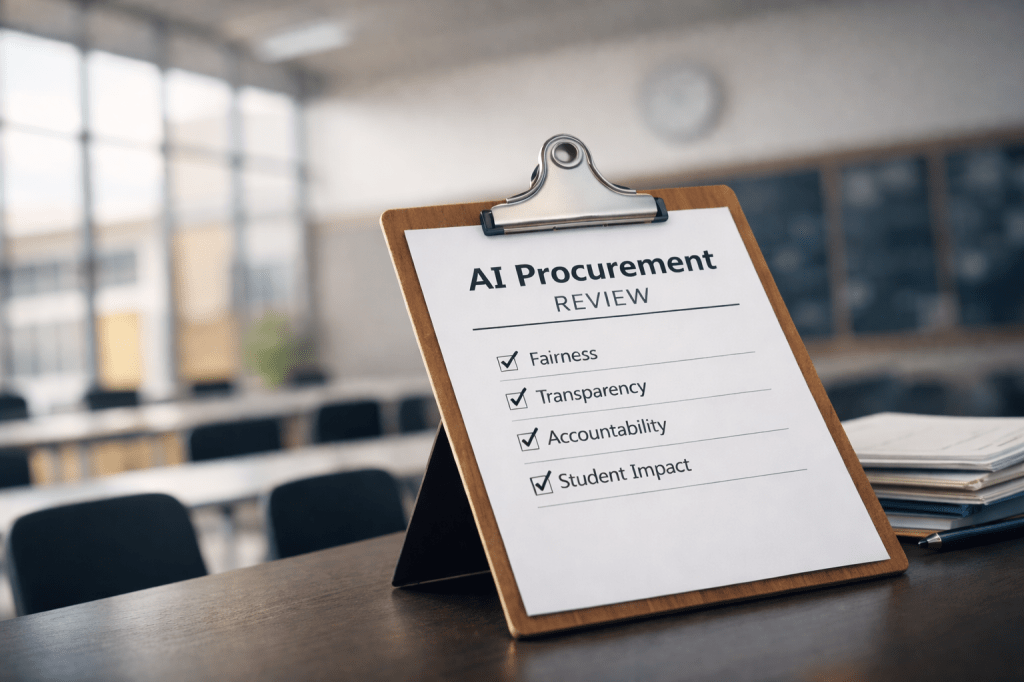

What’s Inside the Toolkit

- Step-by-step checklists to assess fairness, privacy, and transparency.

- YAML templates for documenting findings clearly and consistently.

- Risk registers to track improvements and accountability.

- Issue templates for bias, privacy, and security concerns.

- A fictional example called “ResumeAI” that shows how an assessment might look in practice.

ResumeAI is a generic, educational example, created only to illustrate ethical testing methods. It’s not tied to any company or product.

What It Means for My Journey

This project represents more than another GitHub repo; it’s a pivot toward AI governance, ethics, and digital trust. Through this process, I’ve learned that responsible AI isn’t about slowing innovation; it’s about steering it with purpose.

And for me, this journey is an extension of everything AQ’s Corner stands for: bridging technology, humanity, and digital wellness.

What’s Next

This is Version 1.0, a foundation I plan to build upon.

The next release will introduce light automation: tools that help users validate their checklists and summarize assessments automatically. I envision classrooms, libraries, and small businesses using this toolkit to learn, test, and question AI responsibly.

Because when knowledge is accessible, ethics can scale.
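For readers curious what that light automation might look like, here is a minimal Python sketch. It is not part of Version 1.0, and it rests on several assumptions: the area names, the `pass`/`fail`/`unclear` result values, and the `ChecklistItem`, `validate`, and `summarize` names are all hypothetical, not the toolkit’s actual template fields. The three questions come from the notes I kept while testing tools, but the way they are sorted into areas here is my own illustration, and the results shown for the fictional ResumeAI are placeholders, not findings.

```python
from dataclasses import dataclass

# Hypothetical field names and values; the toolkit's real YAML templates may differ.
REQUIRED_AREAS = ("fairness", "privacy_security", "transparency", "accountability")
VALID_RESULTS = ("pass", "fail", "unclear")


@dataclass
class ChecklistItem:
    area: str      # which evaluation area the question belongs to
    question: str  # the plain-English checklist question
    result: str    # expected to be one of VALID_RESULTS
    notes: str = ""


def validate(items: list[ChecklistItem]) -> list[str]:
    """Return every problem found; an empty list means all areas are covered and all results are valid."""
    problems = []
    covered = {item.area for item in items}
    for area in REQUIRED_AREAS:
        if area not in covered:
            problems.append(f"missing area: {area}")
    for item in items:
        if item.area not in REQUIRED_AREAS:
            problems.append(f"unknown area {item.area!r} for: {item.question}")
        if item.result not in VALID_RESULTS:
            problems.append(f"invalid result {item.result!r} for: {item.question}")
    return problems


def summarize(items: list[ChecklistItem]) -> dict[str, dict[str, int]]:
    """Count results per area, skipping items with an unknown area or an invalid result."""
    counts = {area: {result: 0 for result in VALID_RESULTS} for area in REQUIRED_AREAS}
    for item in items:
        if item.area in counts and item.result in VALID_RESULTS:
            counts[item.area][item.result] += 1
    return counts


# Placeholder results for the fictional ResumeAI example, not real findings.
EXAMPLE_ITEMS = [
    ChecklistItem("transparency", "Did it explain its reasoning?", "fail"),
    ChecklistItem("accountability", "Could users correct inaccurate results?", "unclear"),
    ChecklistItem("privacy_security", "Were privacy and consent disclosures clear and visible?", "pass"),
]

if __name__ == "__main__":
    for problem in validate(EXAMPLE_ITEMS):
        print(problem)
    print(summarize(EXAMPLE_ITEMS))
```

Running it prints `missing area: fairness`, because none of the three sample questions covers fairness, followed by the per-area counts. Returning a list of problems instead of stopping at the first one lets a reviewer see every gap in a single pass.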

From Curiosity to Purpose

Growth doesn’t always come from the big leaps; sometimes, it’s the quiet pivots that change everything.

This project reminded me that curiosity can lead to clarity, and that even in the fast-moving world of AI, we can still build systems that slow down, explain, and teach. Every framework, checklist, and question inside this toolkit reflects that belief: ethical technology isn’t a limitation; it’s leadership.

From code to care, that’s AQ’s Corner. 💙

📎 Explore the Toolkit

🔗 AQ’s Corner – Responsible AI Evaluation Toolkit on GitHub

Footnote: About the Frameworks Mentioned

FATE Principles – A widely cited set of AI ethics principles centered on Fairness, Accountability, Transparency, and Ethics (some versions substitute Explainability), the cornerstones of trustworthy AI.

NIST Privacy Framework – A voluntary U.S. framework from NIST that helps organizations manage privacy risks and strengthen public trust.

CISA Secure by Design – A CISA initiative urging technology makers to build security into their products from the start, not as an afterthought.
