Parents are constantly encouraged to trust technology. We are told to trust the tools used in schools, the platforms that manage communication, and the automated systems that increasingly influence education, opportunity, and access. What parents are rarely told is how those systems were designed, or whether safety was considered before they were put into use.

In technology, safety is not something that can be added after harm occurs. It has to be built in from the beginning. This principle is known as safe design, and it works the same way in technology as it does in the physical world.

Why Safe Design Is Not an Extra Step

When parents walk into a school, hospital, or office building with their children, they do not expect to see warning signs about structural failure. They assume the building is safe because safety standards were addressed long before anyone entered the space. Engineers calculated load limits, exits were planned, inspections were completed, and failure scenarios were anticipated.

That is not extra care. That is the baseline expectation.

AI systems should be held to the same standard. Instead, many systems are deployed first and examined later. Risks are often identified only after people have already been affected by automated decisions. Unlike a cracked wall or broken railing, algorithmic harm is usually invisible. Families may never know why a job application was rejected, why a student was passed over for a recommendation, or why access to an opportunity quietly disappeared.

Where AI Shows Up in Family Life

AI is no longer limited to big technology companies or experimental tools. It is embedded in hiring platforms, educational software, scholarship screening, content moderation, and systems that determine access to services families rely on every day.

When safety is not embedded into the design of these systems, AI does not become neutral. It becomes faster at repeating existing patterns and amplifying past inequities at scale. Decisions happen quickly, quietly, and often without clear accountability.

This is not a future concern. It is already part of daily life for families.

What Safe Design in AI Actually Means

Safe design in AI means asking hard questions early, before a system affects real people. Who could be harmed if the system is wrong? How might that harm show up? What happens when the system encounters situations it was not designed to handle?

A safely designed AI system does not force decisions when confidence is low. It allows for pause, escalation, and human judgment. It assumes uncertainty will exist and plans for it instead of ignoring it.

Just as important, safe design requires real human oversight. Oversight is not a symbolic review step or a checkbox after deployment. It means people have the authority to question, override, or stop automated outcomes. When “the system decided” becomes the final answer, safety has already failed.

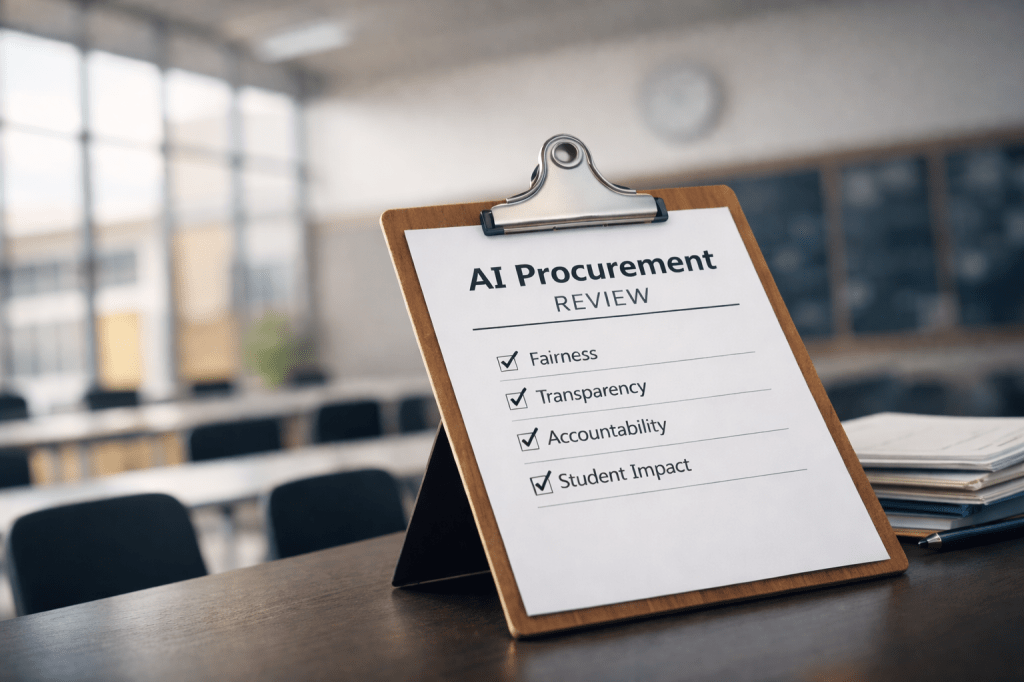

Are There Standards for Safe Design in AI?

Yes, even if parents do not hear about them often.

In the United States, the NIST AI Risk Management Framework, a voluntary framework, outlines how organizations can design and manage AI systems with risk in mind before those systems affect people. While the framework itself is technical, its core ideas are straightforward and familiar. Someone must be accountable for the system. Risks should be identified early. Humans should be able to intervene. Harm should be monitored and corrected.

These principles mirror how safety is handled in other areas of life, from infrastructure to consumer protection.

For families, organizations like Common Sense Media help translate digital safety into everyday guidance. Many parents already rely on Common Sense Media when choosing apps, platforms, and content for their children. Their growing attention to algorithms and AI reflects a broader recognition that technology decisions affect families long before anyone labels them “technical.”

Why This Matters to Me

I approach this topic as both a technology professional and a parent. My background in cybersecurity and governance has taught me that systems fail when risk is treated as an afterthought. In security, we learned long ago that safety cannot be patched on after a breach or retrofitted once damage is done.

The same lesson applies to AI and automated decision-making. The question is not whether systems will fail. The question is whether they were designed to protect people when they do.

The One Question Parents Should Ask

Parents do not need to become technical experts to advocate for safety. One question goes a long way.

What happens when the system is wrong?

If a school, platform, or vendor cannot answer that clearly, then safety was not part of the design. Any AI system that affects children, families, or livelihoods should meet this standard before it is trusted. That expectation is not fear-based. It is informed, responsible, and grounded in how safety has always worked in the real world.

Safe design in AI is not about perfection. It is about accountability, transparency, and protecting people before harm occurs.
