Photo Credit: AQ’S Corner LLC

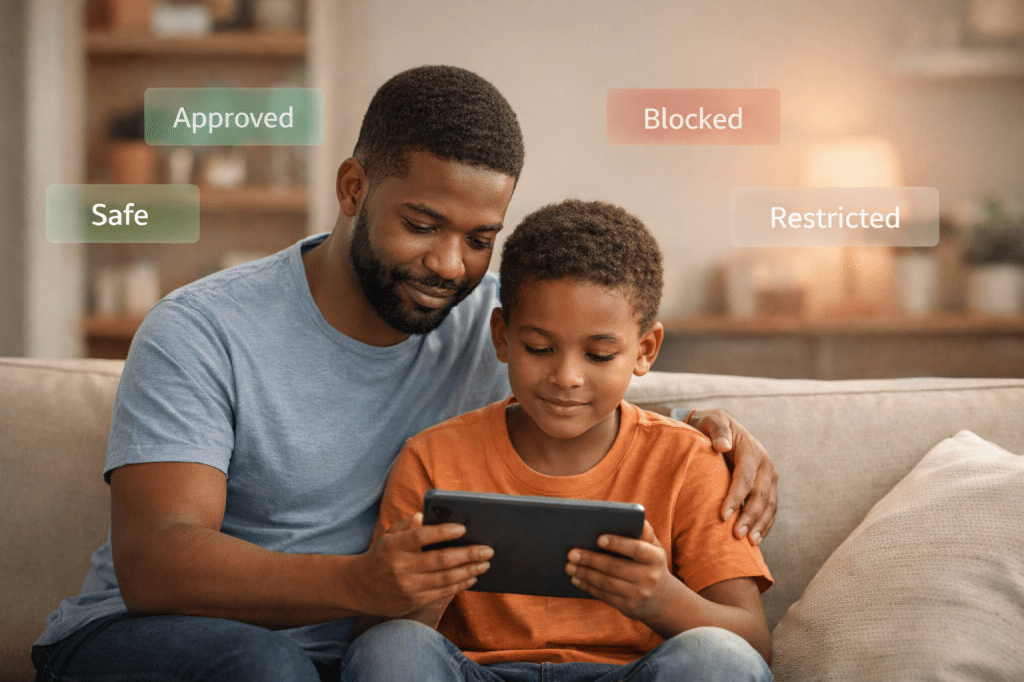

When people think about artificial intelligence, they often picture chatbots, homework helpers, or tools that generate images and text. What rarely gets discussed is a quieter form of AI that plays a much larger role in everyday life. This type of AI does not talk back. It does not explain itself. It makes decisions by sorting people and behavior into categories.

These systems are called classification models, and they are already shaping how families experience the digital world.

A classification system takes information and places it into one of a set of predefined categories. That category might be approved or denied, allowed or blocked, safe or unsafe. Once the system assigns a label, actions often follow automatically. Content is removed. Access is restricted. A request is denied. In many cases, there is no explanation and no clear way to challenge the decision.
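To make the idea concrete, here is a deliberately tiny, hypothetical sketch of a post filter written in Python. The word list, the scoring rule, and the cutoff are all invented for illustration. Real platforms use far more complex models that are usually private. The basic shape is still the same: a score, a label, and an automatic action that comes with no explanation.

```python
# Hypothetical post filter, for illustration only.
# The flagged words and the cutoff are invented; real systems are far more complex.

FLAGGED_WORDS = {"fight", "weapon", "dare"}  # a list chosen by people
THRESHOLD = 0.5                              # a cutoff chosen by people

def score(text: str) -> float:
    """Return the fraction of words in the post that appear on the flagged list."""
    words = text.lower().split()
    if not words:
        return 0.0
    hits = sum(1 for w in words if w.strip(".,!?") in FLAGGED_WORDS)
    return hits / len(words)

def classify(text: str) -> str:
    """Place the post into one of two predefined categories."""
    return "blocked" if score(text) >= THRESHOLD else "allowed"

def act(text: str) -> str:
    """Act on the label alone. No reason is recorded or shown to the user."""
    if classify(text) == "blocked":
        return "Post removed."
    return "Post published."

print(act("Pillow fight dare!"))        # a harmless post that happens to trip the filter
print(act("Water fight at the park!"))  # similar words, different outcome
```

The filter never sees that "Pillow fight dare!" is a joke between friends. It only counts words, and the removal happens without any explanation.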

Where Families Encounter AI Classification Every Day

Parents and children interact with classification systems constantly, often without realizing it. Social media platforms use them to decide whether posts are appropriate or should be removed. Streaming services use them to label content as suitable or unsuitable for certain ages. Educational platforms use them to flag behavior, monitor activity, or identify students who may need intervention.

Financial systems rely on classification when families apply for loans, credit, or assistance. Healthcare systems use them to sort patients by risk level or urgency. In each of these cases, the system does not see a full person or a full story. It sees patterns in data and assigns a label.

Once that label is applied, it carries weight.

Why Labels Matter More Than We Think

A label from an AI system can follow someone quietly. A post labeled as inappropriate may be removed without explanation. A child’s online behavior may be flagged as risky even when it reflects curiosity or learning. A family may be denied a service without knowing which data points led to the decision.

These systems are designed for speed and scale, not for understanding context. Fairness, nuance, and individual circumstances are often secondary to processing large numbers of decisions quickly.

For parents, this matters because children and teens are growing up in environments where automated systems judge behavior without understanding development, mistakes, or intent.

The Myth That “The Computer Decided”

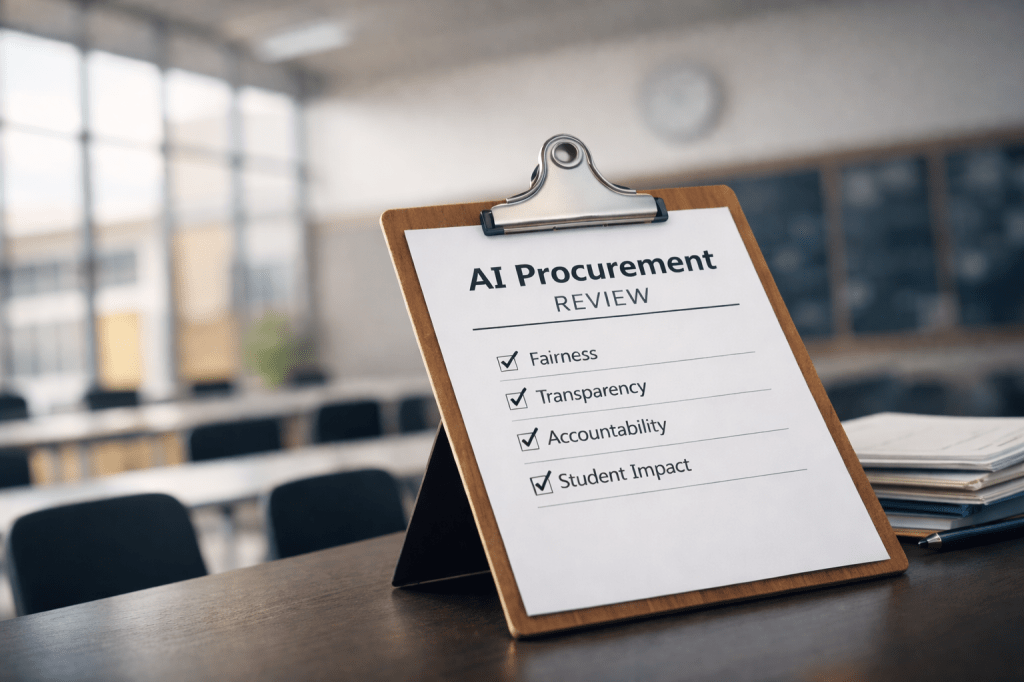

AI systems do not make decisions independently. People design them.

Humans decide what counts as acceptable or risky behavior. Humans choose what data is used and how strict the system should be. Humans decide which errors are acceptable and which are not.
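"How strict the system should be" usually comes down to a cutoff that a person picks. The hypothetical Python sketch below uses six invented risk scores for student activity logs. Each score is paired with whether a human reviewer would actually see a concern. None of these numbers come from a real system. A lower cutoff is stricter and flags more students. A higher cutoff flags fewer. Neither choice removes mistakes. It only decides which kind of mistake happens more often.

```python
# Invented example: (risk score from a model, would a human reviewer see a real concern?)
cases = [
    (0.92, True),
    (0.75, False),  # e.g. curious research on a sensitive topic
    (0.60, True),
    (0.55, False),
    (0.30, False),
    (0.20, True),   # a real concern the model scored low
]

def count_errors(threshold: float) -> tuple[int, int]:
    """Flag every case at or above the threshold, then count both kinds of mistake."""
    false_alarms = sum(1 for s, concern in cases if s >= threshold and not concern)
    missed = sum(1 for s, concern in cases if s < threshold and concern)
    return false_alarms, missed

for threshold in (0.5, 0.8):
    fa, missed = count_errors(threshold)
    print(f"threshold={threshold}: false alarms={fa}, missed concerns={missed}")
```

With these made-up numbers, the stricter cutoff of 0.5 wrongly flags two students and misses one real concern. The looser cutoff of 0.8 flags no one wrongly but misses two real concerns. Someone has to decide which trade-off is acceptable.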

When a system makes a harmful or unfair decision, saying “the computer decided” shifts responsibility away from the people who built and deployed it. Those decisions reflect human choices made earlier and hidden behind technology. Understanding this helps parents push back against the idea that automated decisions are always correct or unbiased.

Why These Systems Can Be Unfair

Classification systems learn from past data. If that data reflects bias, misunderstanding, or unequal treatment, the system learns those patterns and applies them consistently.
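Here is a greatly simplified, hypothetical illustration in Python of how a system can absorb bias from past decisions. The "history" is invented. Imagine past reviewers approved applicants from one neighborhood more often than another, for reasons unrelated to the applicants themselves. The toy "model" just learns each neighborhood's past approval rate and approves anyone whose neighborhood meets a cutoff. Real models use many more inputs. Even so, a similar pattern can appear when one of those inputs stands in for a group.

```python
from collections import defaultdict

# Invented historical decisions: (neighborhood, approved?)
history = [
    ("north", True), ("north", True), ("north", True), ("north", False),
    ("south", False), ("south", False), ("south", True), ("south", False),
]

def train(records):
    """'Learn' the past approval rate for each neighborhood."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, ok in records:
        total[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / total[g] for g in total}

def predict(model, group, cutoff=0.5):
    """Approve when the group's past approval rate meets the cutoff."""
    return model.get(group, 0.0) >= cutoff

model = train(history)
for applicant in ["north", "south", "south"]:
    print(applicant, "approved" if predict(model, applicant) else "denied")
```

Every new applicant from "south" is denied, every time, regardless of their own circumstances. The old pattern is not corrected. It is repeated consistently.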

Many of the labels used in these systems are also subjective. What counts as inappropriate language, risky behavior, or suspicious activity depends heavily on culture, age, and context. When those differences are ignored, families and children are more likely to be misclassified. Because these systems operate automatically and repeatedly, small biases can turn into ongoing problems that affect people over time.

What Parents Can Do

Parents do not need to become experts in artificial intelligence to protect their families. Awareness is the first step.

Ask questions about how platforms decide what your child can see or share. Pay attention when schools or apps rely heavily on automated systems. Advocate for transparency and human review, especially when decisions affect access, discipline, or opportunity.

It is also important to talk to children about these systems. Help them understand that being flagged or restricted by a platform does not define who they are. An automated label is a technical judgment, not a moral one.

Why This Conversation Matters Now

AI systems are becoming part of everyday life faster than families can adapt. Classification systems already shape access, visibility, and opportunity, often without explanation or appeal.

For parents and caregivers, understanding how these systems work is part of digital safety. It is not about fear or avoiding technology. It is about clarity, accountability, and making sure technology serves people rather than silently judging them.

AI classification systems may be invisible, but their effects are not. They sort, label, and decide at scale. They influence what families see, what children are allowed to do, and how people are treated by systems they cannot see.

By understanding how these systems work, parents can ask better questions, advocate for stronger safeguards, and help raise children who know that technology should be questioned, not blindly trusted.

Further Reading

For readers who want a plain-language explanation of how automated systems make decisions and what protections exist for consumers and families, the U.S. Federal Trade Commission provides a helpful overview of artificial intelligence and automated decision-making:

https://www.ftc.gov/business-guidance/artificial-intelligence
