Photo Credit: AQ’S Corner LLC

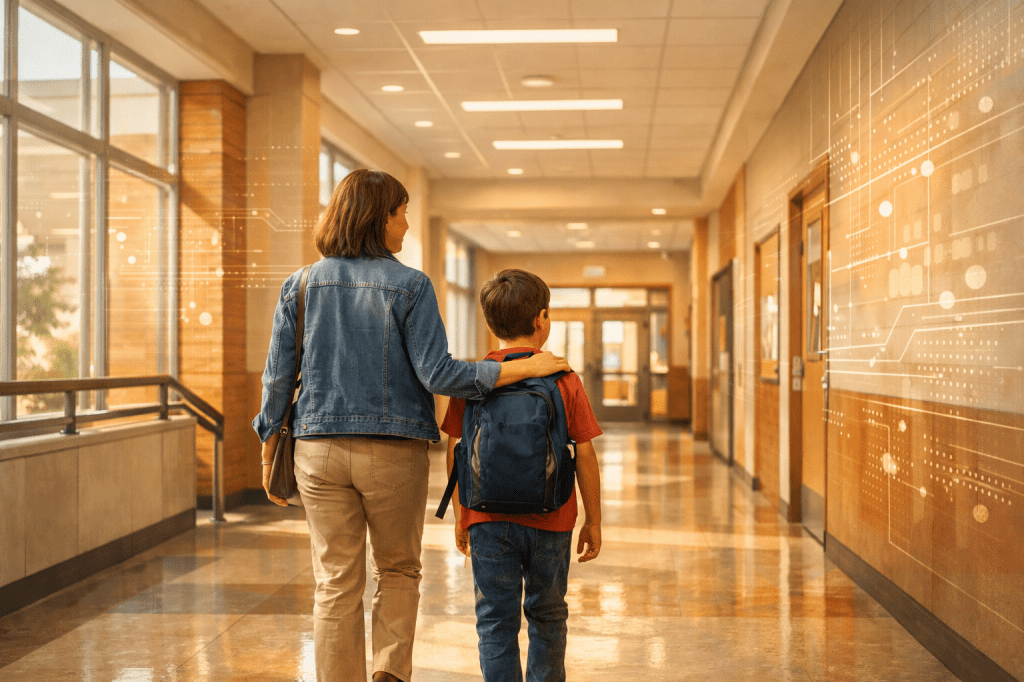

Artificial intelligence plays a growing role in the apps and platforms children use every day. Games, social media, video platforms, messaging apps, and learning tools rely on automated systems to decide what content is shown, which interactions are allowed, what behavior is flagged, and when safety actions occur. These decisions often feel invisible to families, yet they shape children’s digital experiences in powerful ways.

Many of these decisions are made by what are known as black box AI systems. A black box system is one that produces outcomes without clearly explaining how it reached those outcomes. Information goes in and a decision comes out, but the reasoning in between is hidden or difficult to understand. For parents, this means a platform may recommend content, restrict access, or allow certain interactions without offering a clear explanation of why the system made that choice.

Children do not fill out traditional forms when they use apps, but their behavior becomes the input. What they watch, how long they stay engaged, who they interact with, what they click, and what they ignore are all signals collected by AI systems. These signals are analyzed to predict interests, assess risk, and shape future recommendations. Parents and children rarely see how those signals are interpreted or which ones matter most.

This issue is not limited to one company or one type of app. Well-known platforms like Roblox and Instagram are often discussed because of their visibility, but the same decision structures exist across many youth-facing platforms. Gaming apps, short-form video platforms, social networks, chat tools, and even educational apps rely on automated moderation and recommendation systems that operate in similar ways.

Most platforms offer parental controls and safety settings, but these tools often focus on surface-level restrictions rather than decision transparency. Parents may see content warnings, blocked features, or moderation notices without being told what triggered those actions. A report filed by a parent or child may be dismissed without explanation. Content that feels inappropriate or confusing may suddenly appear in a child’s feed. When parents do not understand why the system acted, it becomes harder to guide their children or intervene effectively.

Poor explanation and limited documentation create an accountability gap. Families are told to monitor and supervise, yet the systems making the decisions remain largely opaque. Parents are asked to manage outcomes without being given insight into how those outcomes were produced. This shifts responsibility away from platforms and places it on families who do not control the underlying technology.

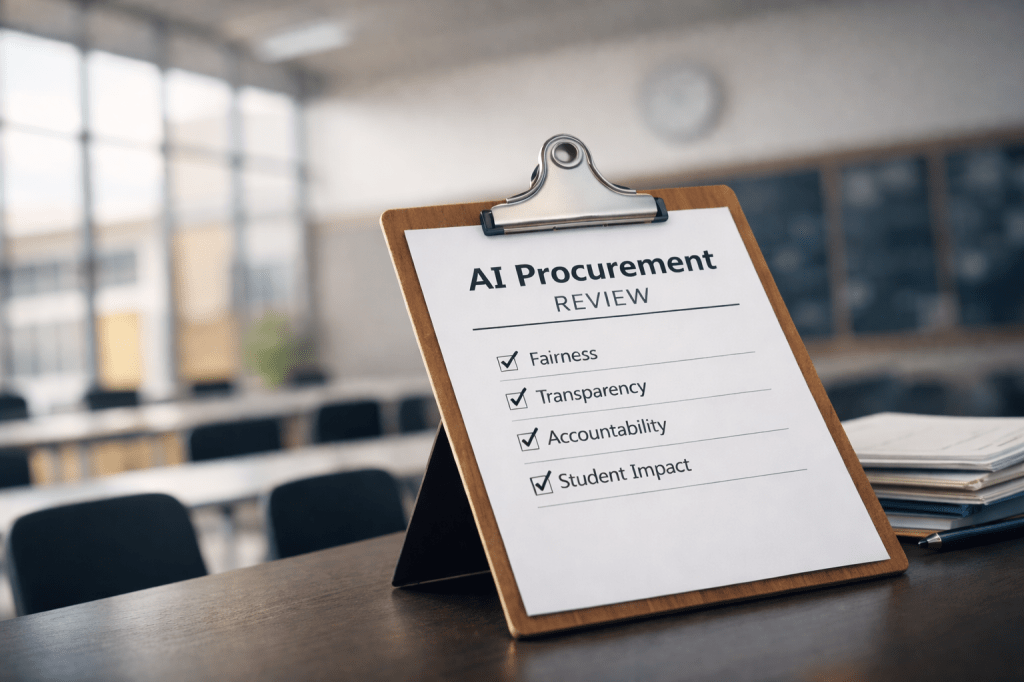

This is why black box AI is not just a technical issue. It is a governance and policy issue. When automated systems make decisions that affect children’s safety, development, and well-being, there must be clear accountability. Transparency does not require companies to reveal proprietary algorithms or trade secrets. It does require platforms to explain, in plain language, how AI systems influence content, moderation, and safety decisions.

From a policy perspective, children represent a population that deserves additional protection. Their digital environments are shaped by systems they do not understand and cannot challenge. Parents need meaningful explanations, not just settings and dashboards. Policymakers need assurance that youth-facing platforms are not operating unchecked behind automated decision systems that cannot be examined or questioned.

As more of childhood moves online, trust in digital platforms will increasingly depend on transparency and accountability. Families are not asking for perfection. They are asking for clarity. When platforms explain how AI decisions are made and where human oversight exists, parents are better equipped to protect their children and guide them responsibly.

Here’s a real example of how a major platform documents its AI systems in policy language. Roblox publicly states that it uses artificial intelligence to scale content moderation and to provide AI-based tools, but the explanation is often technical and directed at developers rather than parents or everyday users.

According to Roblox’s own help documentation, the platform “provides certain generative AI-based tools (collectively, ‘AI Tools’) as an option to facilitate your use and/or creation of Virtual Content and Experiences.” The terms go on to define how users may submit prompts, how responses are generated, and what responsibilities users carry when interacting with these tools. They do not, however, clearly explain how AI decisions affect safety, content exposure, or moderation outcomes for children and families.

“Roblox provides certain generative AI-based tools (collectively, ‘AI Tools’) as an option to facilitate your use and/or creation of Virtual Content and Experiences… You remain responsible for ensuring that all Materials… comply with the Terms and these AI Terms.” — Roblox AI-Based Tools Supplemental Terms and Disclaimer (https://en.help.roblox.com/hc/en-us/articles/20121392440212-AI-Based-Tools-Supplemental-Terms-and-Disclaimer)

Black box AI already shapes the digital spaces where children play, learn, and connect. Making those systems more transparent is not about slowing innovation. It is about ensuring that innovation serves children safely and responsibly. Platform accountability, clear explanations, and thoughtful governance are essential if parents are expected to trust the technology their children use every day.
