Pictures used to feel solid. If you saw it, you believed it. For generations, photographs served as proof. They captured memories, confirmed events, and helped us trust what we were seeing. Today, that relationship between people and images is changing quickly, and families are standing right in the middle of that shift.

This article is inspired by a recent academic research paper that studies a new generation of AI-generated deepfakes. While the original paper is technical and written for researchers, the ideas behind it matter deeply for parents, caregivers, and anyone raising children in a digital world. This is not about fear or panic. It is about awareness, confidence, and building healthy digital habits at home.

What Is a Deepfake?

A deepfake is an image or video created by artificial intelligence that looks real but is not. Early deepfakes were often easy to spot. Faces looked distorted, movements felt unnatural, and something about the image just did not seem right. Many people learned to trust their instincts when looking at online content.

Today’s deepfakes are very different. New AI systems, especially those known as diffusion models, can generate images with remarkable detail and realism. These systems do not need an existing photo to edit. Instead, they start from random visual noise and refine it step by step, adding detail until the result looks like a genuine photograph. Because of this, the usual advice to “look closely” is no longer enough.

Why This Matters for Families

This research is not just about technology. It is about trust. Families interact with images constantly through social media, group chats, school platforms, video thumbnails, and news feeds. Children and teens, in particular, learn about the world visually. Images often shape their understanding before words do.

Deepfakes can be used to spread false stories, create fake people, manipulate emotions, or make something appear official when it is not. They show up in scams, misinformation campaigns, and viral posts designed to confuse or pressure viewers. The research shows that many tools designed to detect fake images were trained on older types of deepfakes. When those tools are tested against newer, more realistic images, they often struggle. If tools built specifically for this job struggle, it is no surprise that people struggle too.

What the Researchers Found in Simple Terms

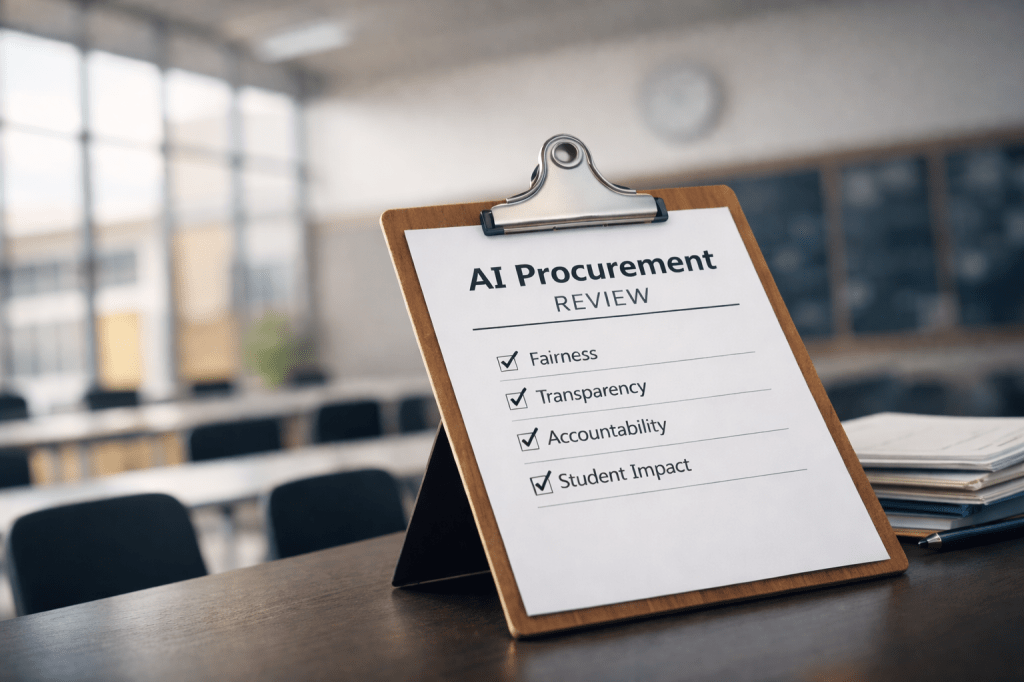

The researchers asked a straightforward question: are today’s detection systems ready to identify today’s AI-generated fake images? Their findings suggest that the answer is not always yes. Modern AI can create images that leave very few visual clues behind. Often there are no obvious lighting problems, facial distortions, or other clear mistakes that give the image away.

The researchers also found that many detection systems were trained using outdated examples of fake images. As the technology improved, the training data did not always keep up. The encouraging part is that when detection systems are trained on newer and more diverse fake images, they perform better. Exposure matters. Learning improves with experience.

The Most Important Lesson for Families

The biggest takeaway from this research is not technical. It is human. We can no longer rely on our eyes alone to decide what is real online. For families, this means shifting away from trying to spot visual flaws and toward asking better questions.

Who created this image? Why was it shared? What is it trying to make me feel? Can this information be confirmed somewhere else? These questions help build digital resilience. They encourage children and adults to pause instead of reacting immediately.

How to Talk About Deepfakes With Kids

Conversations about deepfakes do not need to be complicated. Parents and caregivers can keep it simple and reassuring. It helps to explain that some pictures online are made by computers, not people, and that they can look real even to adults. Let children know that if something feels confusing, surprising, or upsetting, it is always okay to pause and ask questions together.

This kind of conversation builds awareness without fear. It also sends a clear message that children are not expected to navigate the digital world alone.

Why This Research Is Actually Encouraging

Reading about deepfakes can feel overwhelming, but this research offers a more hopeful perspective. Experts recognize the problem and are actively working to improve detection tools. They understand that as technology evolves, safety and education must evolve with it. This is how progress usually works. New tools emerge, risks become clearer, and people respond thoughtfully.

Families are not behind. In many ways, they are right on time.

A Note for Families

Children do not need to become AI experts. What they need is the ability to think critically, ask questions, and talk openly about what they see online. Technology will continue to change, and images will continue to become more convincing. What lasts is the habit of pausing, questioning, and seeking context.

Truth online is not protected by perfect tools alone. It is protected by informed people. That education starts at home.
