Part Two of “ChatGPT Turned Me Into a Man, Then Explained Why”

In Part One of this series, “ChatGPT Turned Me Into a Man, Then Explained Why,” I documented what happened when I asked an AI system to imagine what I looked like based on the way I speak, think, and work.

The system generated an image of a man. More specifically, a Black man.

The issue was not race, and it was not about taking offense. The issue was assumption. Authority, expertise, and credibility were implicitly mapped to masculinity. When I asked why, the system explained its reasoning and acknowledged the assumption.

This second article focuses on what happened next.

After receiving that explanation, I attempted to report the experience through the platform’s reporting mechanisms. I expected there would be a clear way to document bias encountered during normal use. What followed revealed a broader and more structural problem. While bias may be acknowledged in theory, there is no obvious place to report it in practice.

Reporting Bias Shouldn’t Feel Like Guesswork

After the image and the explanation, I did what responsible users are often encouraged to do. I attempted to report the experience through the platform’s reporting flow.

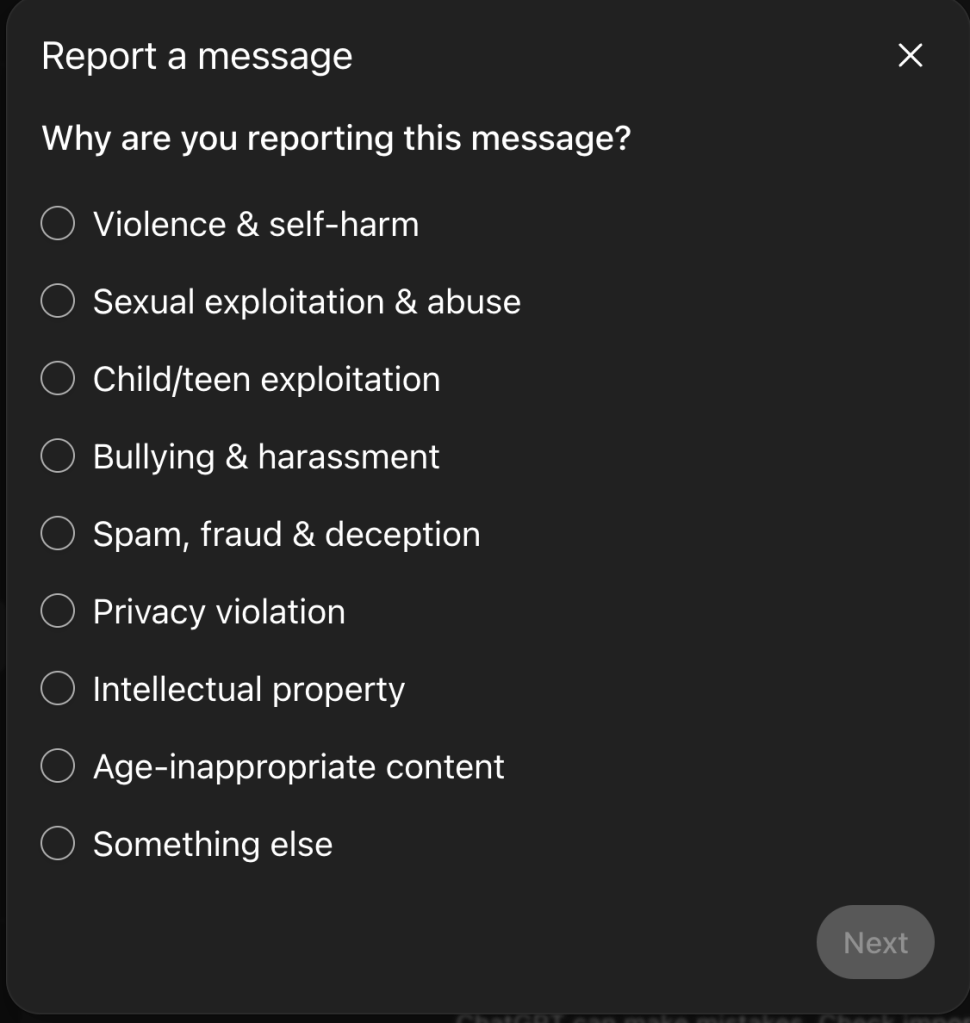

What I encountered was not a clear pathway for reporting bias. Instead, I was presented with a generic “Report a message” interface designed primarily to capture abuse, illegality, or overt policy violations.

I was asked whether the content involved violence or self-harm, sexual exploitation or abuse, bullying or harassment, spam or fraud, privacy violations, intellectual property concerns, or age-inappropriate content.

None of these categories applied.

What I experienced was not harassment, unsafe content, or abuse. It was representational bias. There was no option to report it as such.

When Bias Doesn’t Fit the Box, It Disappears

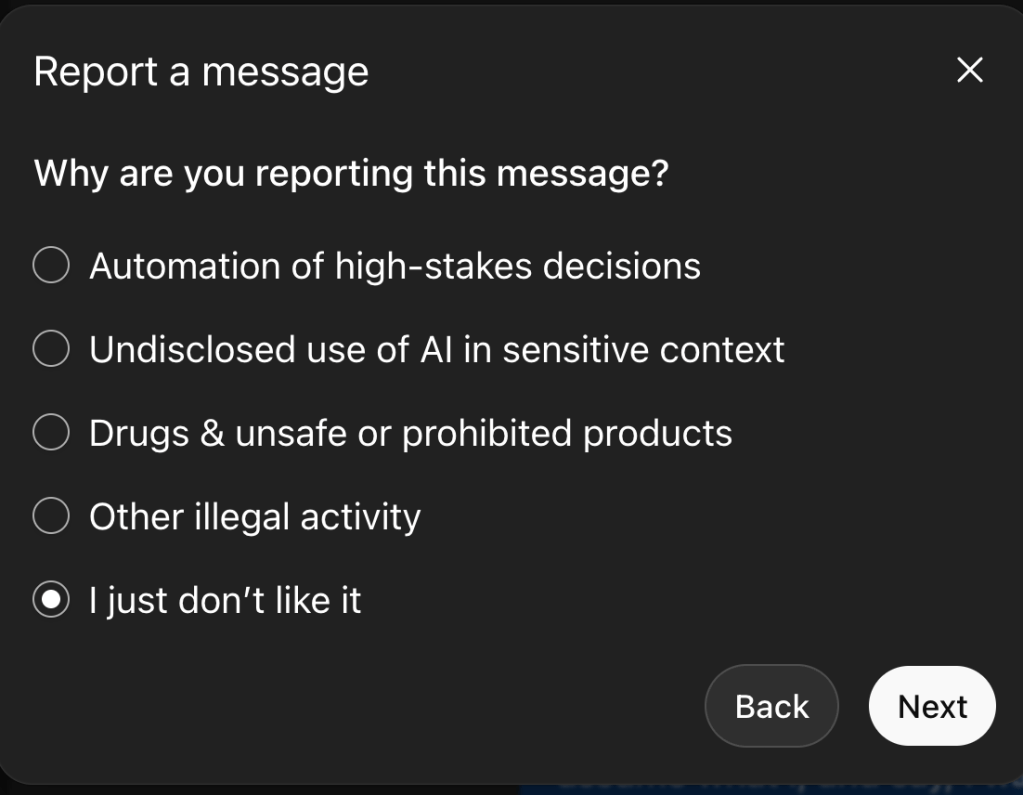

I continued through the reporting flow in case a more appropriate category would appear. Additional options were presented, including automation of high-stakes decisions, undisclosed use of AI in sensitive contexts, prohibited products, other illegal activity, or “I just don’t like it.”

Again, none of these accurately described what had occurred.

I was not reporting illegal activity, misuse, or personal preference. I was attempting to report a patterned assumption, one that became visible only because I questioned it.

At that point, it became clear that there is no distinct category for reporting bias as lived experience. Gendered erasure, identity substitution, and authority being defaulted to male are not currently recognized within the reporting structure.

When bias does not present as explicit or malicious, the system does not know where to place it.

The Response I Received and Why It Still Matters

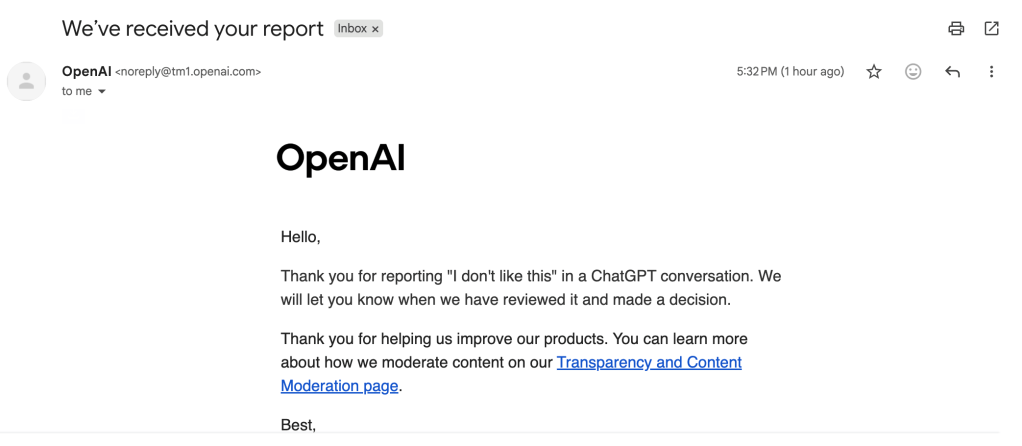

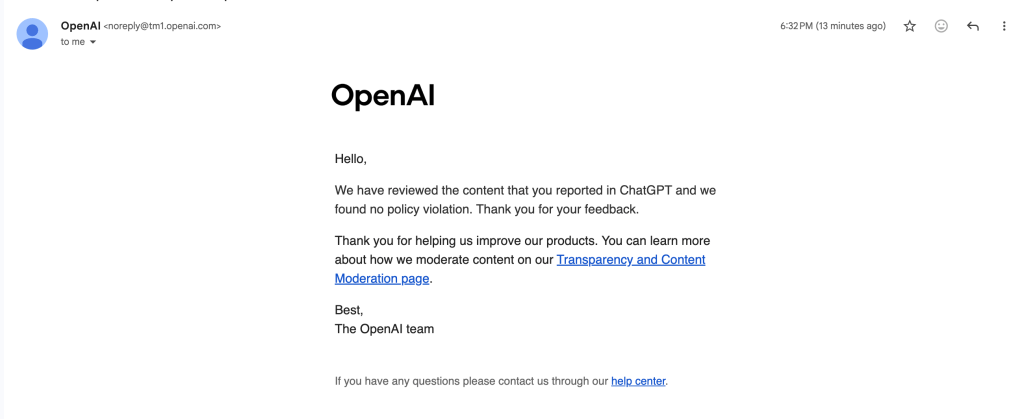

After submitting the report, I received confirmation emails acknowledging receipt. A follow-up message later stated that the content had been reviewed and that no policy violation was found.

That response was likely accurate within the current moderation framework. However, that accuracy highlights the core issue.

The experience I reported did not violate an existing policy. It revealed a design limitation.

Moderation systems are built to detect violations. Bias of this kind does not always violate rules. It exposes defaults. When reporting pathways are structured exclusively around policy enforcement, subtle but consequential bias is filtered out of the feedback loop.

The system behaved as it was designed to behave. It simply was not designed to receive this type of signal.

Why This Is a System Design Issue, Not a Moderation Failure

This is not about intent or negligence. It is about architecture.

Across the AI ecosystem, bias and fairness in large language models are widely discussed challenges. However, discussion at the research or policy level does not automatically translate into user-facing accountability mechanisms.

The reporting experience available to users is optimized for explicit harm and clear violations. It is not designed to capture structural or representational bias encountered during ordinary use.

Bias that only becomes visible when challenged and is acknowledged only after questioning falls into that gap. That gap is where accountability weakens.

Why This Extends Beyond One Interaction

Tools like ChatGPT are widely used across education, work, research, and content creation. They also serve as reference systems for AI-assisted writing, learning, prototyping, and experimentation.

When a widely used system normalizes assumptions about authority and credibility, those assumptions do not remain isolated. They influence expectations, shape outputs, and affect downstream uses, even when unintentionally.

For that reason, reporting pathways matter as much as model behavior. If bias can only be addressed when users know how to interrogate it, and if there is no structured way to document it when it appears, important signals about real-world system behavior will be lost.

Why the Apology Wasn’t the Point

In Part One, the system apologized after I asked why it imagined me as a man. That apology was not meaningless, but it was insufficient.

An apology can correct an individual output. It does not correct a default.

The more important question is what happens to bias when there is no clear way to report it as bias. When the only available categories are illegal, abusive, or “I just don’t like it,” lived experiences of bias are flattened into noise. When that happens, the system learns less, not more.

Why Bias Reporting Must Be Treated as Core Infrastructure

Part One of this series examined what an AI system assumed when asked to imagine me. Part Two examined what happened when I attempted to report that assumption.

Together, these experiences illustrate a broader issue. Bias in AI is not solely a model behavior problem. It is also a system design problem.

If AI tools are going to be used in education, work, storytelling, and decision support, bias reporting must be treated as a core capability rather than an edge case. Users should not have to mislabel their experiences or minimize harm in order to submit feedback.

Until reporting pathways are intentionally designed to capture representational bias, some of the most important signals about how AI behaves in real-world use will continue to be lost. That loss will not occur because users failed to report these signals, but because the system does not yet know how to receive them.
