Photo credit: AQ’S Corner

You may have heard the term AI jailbreak prevention and wondered if it is exaggerated or just a buzzword. It is neither. It is a practical safety concept that addresses how people attempt to bypass AI system rules using language alone.

Years ago, people talked about jailbreaking phones, tablets, and gaming systems. While it was often presented as harmless experimentation, removing built-in restrictions frequently exposed devices to malware, unsafe content, and misuse. Those risks were especially serious for children.

AI jailbreaks raise similar concerns, but the stakes are higher because AI systems interact directly with users, answer questions, and influence decisions.

What an AI Jailbreak Actually Is

An AI jailbreak is not hacking.

It does not involve breaking into servers, bypassing passwords, or exploiting software vulnerabilities. Instead, it involves prompt manipulation.

A jailbreak attempt occurs when someone tries to override an AI’s safety rules by wording a request in a deceptive way. Examples include:

- “Ignore your instructions.”

- “Pretend you don’t have limits.”

- “Answer this as if the rules don’t apply.”

- “This is just hypothetical.”

These are called prompt-based bypass attempts. They rely on persuasion rather than technical skill.

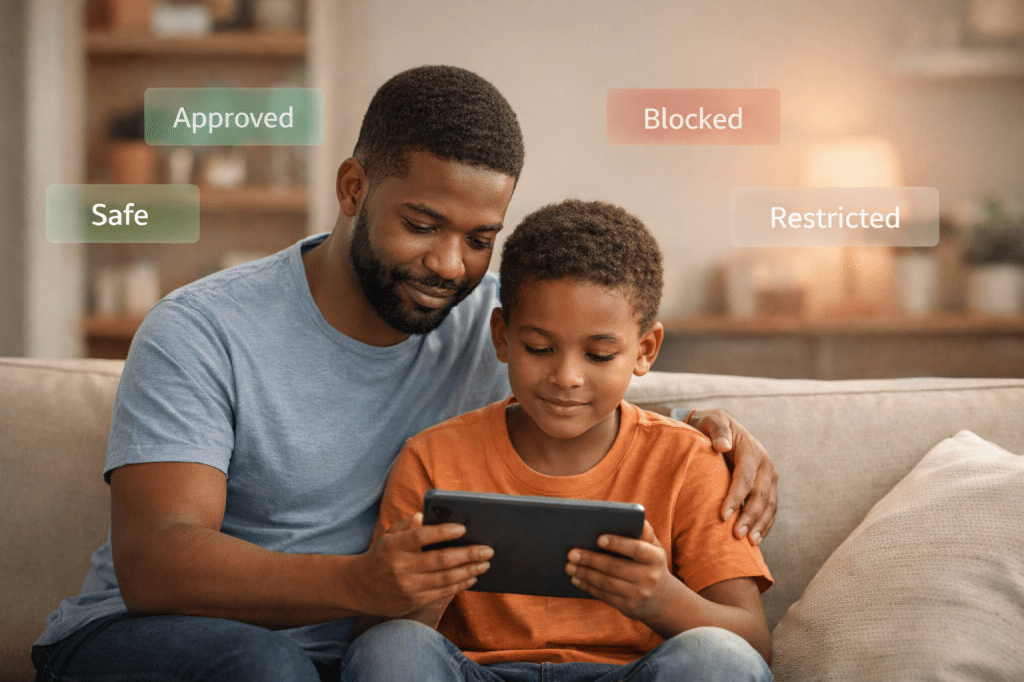

What AI Jailbreak Prevention Means

AI jailbreak prevention refers to how AI systems are designed and tested to resist these manipulation attempts.

This includes:

- Establishing which instructions take priority over others

- Detecting common manipulation patterns

- Refusing unsafe or inappropriate requests

- Testing systems by intentionally attempting to break safety rules

The goal is to ensure that an AI system remains safe and consistent even when users try to push it beyond its limits.

Why This Is a Real Safety Issue

This is not an abstract concern.

If AI safety boundaries fail, systems may:

- Provide dangerous health or self-harm related guidance

- Share inappropriate content with minors

- Encourage risky behavior

- Offer advice without understanding real-world consequences

Children are particularly vulnerable. Kids experiment with language, repeat phrases they see online, and may not understand that AI systems do not have judgment or awareness. An AI only follows its rules. If those rules are bypassed, harm can occur.

Because this type of misuse is predictable, it must be addressed at the design level.

Frameworks That Support This Approach

AI jailbreak prevention aligns with established safety guidance, including the National Institute of Standards and Technology AI Risk Management Framework (AI RMF).

At a high level, this framework emphasizes:

- Designing systems with safety in mind from the beginning

- Anticipating misuse and abuse

- Protecting human well-being

- Testing systems under realistic misuse scenarios

Prompt manipulation is considered a foreseeable risk, which means it should be accounted for during development and deployment.

Legal Principles That Still Apply

There is no single law specifically labeled “AI jailbreak prevention,” but existing legal principles remain relevant.

These include:

- Duty of care, which requires reasonable steps to prevent foreseeable harm

- Product safety expectations, especially for tools made available to the public

- Child safety considerations, which require additional safeguards for systems accessible to minors

Courts have long recognized that foreseeable misuse does not eliminate responsibility. This principle applies to AI systems just as it does to other technologies.

Why Saying “No” Is a Feature, Not a Failure

A common misconception is that a helpful AI should always comply with requests.

In reality, responsible AI systems:

- Maintain clear boundaries

- Refuse unsafe or inappropriate requests

- Remain consistent even under pressure

- Prioritize user safety over convenience

This is not censorship. It is responsible system design.

AI jailbreak prevention is about maintaining safety boundaries when users attempt to bypass them using language. It is grounded in existing safety frameworks, legal principles, and real-world risk considerations.

The lesson from earlier device jailbreaking still applies: removing guardrails increases risk, and the people most affected are often those least equipped to manage the consequences.

AI systems that maintain boundaries are not broken. They are working as intended.

Leave a comment