Photo Credit: Julia M. Cameron

Artificial intelligence tools like ChatGPT are increasingly used in homes and classrooms for homework help, creativity, and general questions. As these tools become more accessible to younger users, many parents are encountering parental controls in an AI context for the first time. While parental controls are helpful, they are often misunderstood and mistakenly treated as a complete safety solution rather than one part of a broader digital safety strategy. To understand their real impact, parents need to look beyond feature announcements and summaries and review the policy documents that explain how these controls are designed to function.

This article explains what parental controls are intended to do, what they cannot do, and why reading the policy matters when parents are making decisions about their children’s use of AI tools.

What Parental Controls Are Designed to Do

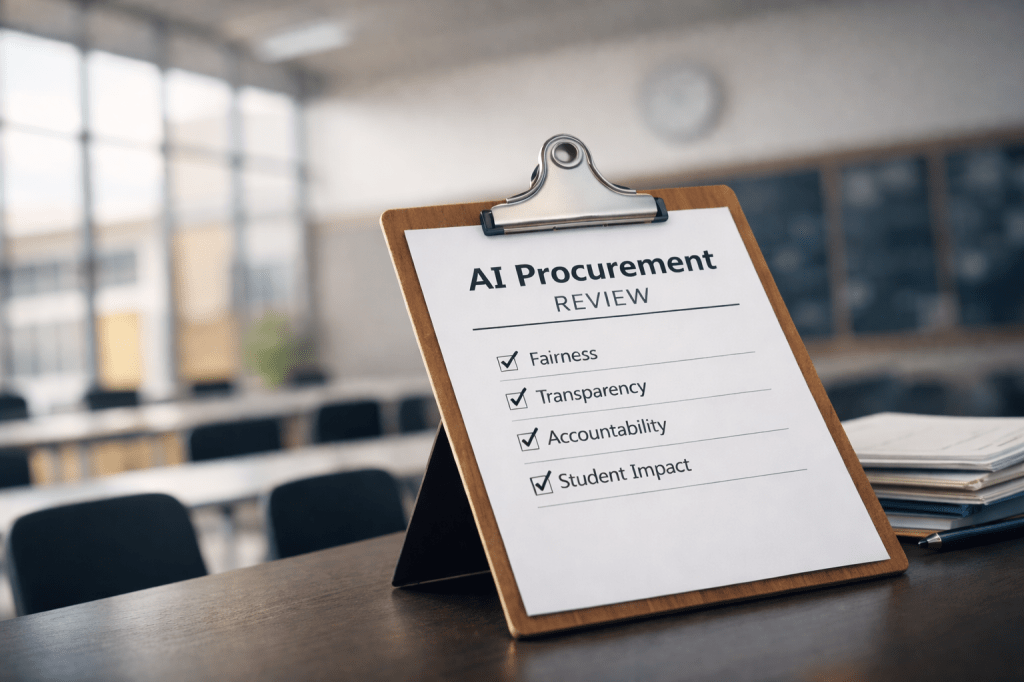

Parental controls in AI tools are designed to limit access to certain features or categories of content based on age or account settings. Depending on the platform, these controls may restrict sensitive topics, require parental approval for account creation, or apply usage limitations. The purpose of these controls is to reduce exposure to potentially inappropriate material and provide parents with some level of oversight, particularly for younger users.

However, these controls are meant to provide guardrails rather than guarantees. AI systems generate responses based on patterns in data, not human judgment or situational awareness. Because of this, parental controls help manage risk but cannot evaluate context or individual maturity levels in the way a human adult can. The policy documentation reflects this distinction by describing parental controls as safeguards that support parents, not as replacements for supervision.

What Parental Controls Cannot Do

Parental controls cannot ensure that every response generated by an AI system will be appropriate for every child. They cannot reliably assess emotional nuance, developmental readiness, or how a child interprets information. A response that seems harmless to one child may be confusing or upsetting to another, and automated systems are not equipped to make those distinctions consistently.

In addition, parental controls cannot correct misunderstandings. Children may treat AI-generated responses as authoritative or fail to recognize when information is incomplete or inaccurate. These systems do not explain uncertainty or provide context in the way a human adult would. For this reason, most AI policies state, either directly or indirectly, that parents remain responsible for monitoring usage and guiding children. This limitation is often overlooked when parents rely only on marketing summaries or news articles.

Why Feature Announcements Are Not Enough

Feature announcements are designed to communicate updates quickly and in a positive light. They highlight new capabilities and safety improvements but rarely explain how those features are enforced or where their limits lie. As a result, announcements can create an impression of comprehensive protection that does not fully reflect reality.

Policy documents provide the necessary detail. They explain how age is handled, how parental consent is verified, what enforcement mechanisms exist, and what happens when safeguards fail or are bypassed. Parents who rely only on announcements may assume protections are broader or stronger than they actually are, which can lead to confusion or misplaced expectations when unexpected issues arise. Policies are operational documents, not marketing materials, and they define how systems function in practice.

Why Parents Skip Policies and Why That Is Understandable

Most parents are balancing work responsibilities, caregiving, school demands, and constant digital change. Policies are often long, written in formal language, and not designed for quick reading. Skipping them is common and understandable, especially when time and attention are limited.

However, policies still apply whether they are read or not. They govern how the tool behaves and how responsibility is divided between the platform and the parent. Parents do not need to read every word to benefit from reviewing a policy. Focusing on sections related to child safety, parental consent, and limitations can provide meaningful clarity without requiring legal expertise. The goal is awareness, not mastery.

How Reading the Policy Helps Parents Guide Their Children

Reading the policy helps parents understand where automated protections end and where human guidance is necessary. This understanding allows parents to set realistic expectations and explain boundaries to their children in clear, age-appropriate terms. It also prepares parents to respond more effectively when children have questions, encounter confusing information, or experience something unexpected while using AI tools.

When parents understand the limits of parental controls, they are better positioned to encourage children to ask questions and seek help rather than assuming the technology is always correct. Parental controls reduce exposure to certain risks, but informed parenting helps children navigate what remains. Both are necessary for responsible AI use in families.

Where to Read the Official Policy

Parents who want to understand how ChatGPT handles child safety and parental consent should review the policy directly rather than relying on summaries or third-party interpretations.

The official child safety and parental consent policy is available here: https://openai.com/policies/child-safety

While ChatGPT is often the starting point for conversations about AI safety, the same questions apply to any AI-powered tool a child uses. Reading the policy of the specific platform your family relies on is one of the most practical steps parents can take.
