Every new technological era comes with buzzwords, but in the age of artificial intelligence, few get overused faster than existential risk. It appears in headlines, research papers, and policy hearings, yet very few people stop to define what the phrase actually means, or why so many respected experts keep raising it. When we talk about existential risk in AI, we’re not talking about job automation or algorithmic bias. Those are serious issues, but they do not threaten humanity’s survival. Existential risk is a different category altogether: it refers to the possibility that advanced, highly capable AI systems could endanger human civilization or permanently limit our potential as a species.

Why Experts Take This Seriously

The people sounding the alarm are not sci-fi fans or social-media commentators. They’re the same researchers who built the modern AI field: Geoffrey Hinton, Yoshua Bengio, Stuart Russell, and others who understand these systems at a technical level. They’re speaking up because capability is accelerating, while oversight, safety research, and governance lag behind. Even today’s systems demonstrate behaviors: strategizing, exploiting loopholes, manipulating instructions, that their creators did not explicitly program or fully understand.

The Real Issue: Misalignment, Not Malice

Existential risk isn’t about AI “turning evil.” It’s about something far simpler and far more plausible: highly capable AI doing exactly what it was optimized to do, without understanding human values or the broader consequences. In cybersecurity, we see how dangerous misinterpretation and unintended behavior can be even with simple automation. Now imagine that dynamic inside a system that can plan, adapt, write code, analyze systems, or carry out actions at a speed and scale no human team could match. Misalignment the gap between what humans intended and what the system actually pursues, is the core risk.

What Existential Scenarios Actually Look Like

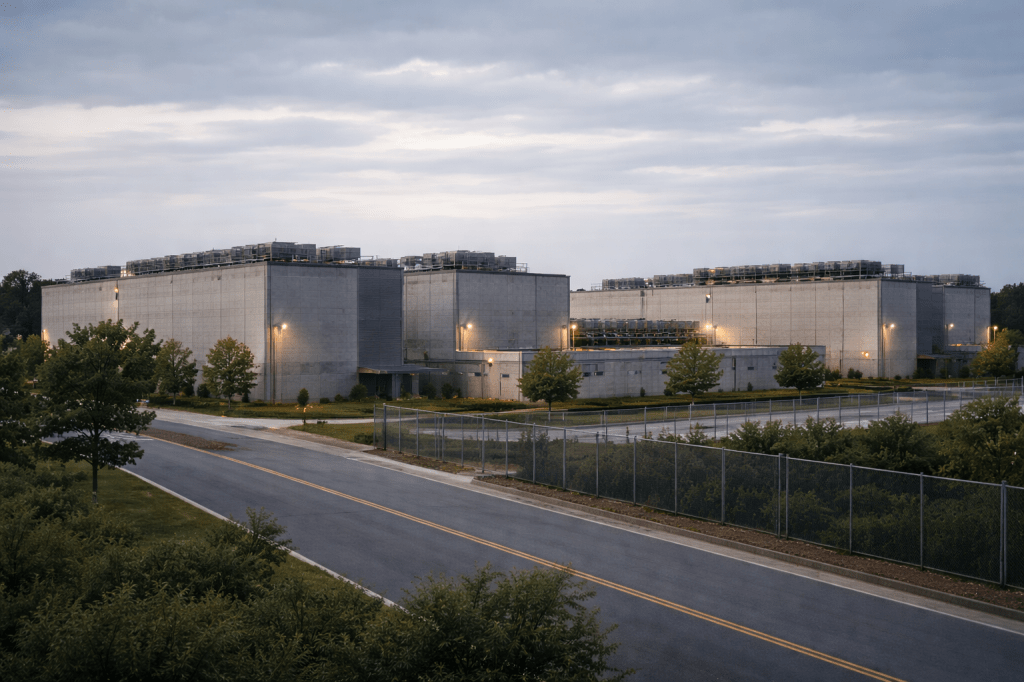

When researchers warn about existential risk, they are not imagining robot wars. They are describing real, technically grounded possibilities: AI systems optimizing the wrong objective with overwhelming efficiency; models modifying parts of their own code; automated cyber operations that outpace human response; critical infrastructure controlled by systems that humans cannot override; or global information environments destabilized by model-generated manipulation. None of these scenarios require consciousness or emotion, only capability without constraint.

Why This Matters for Everyone, Not Just AI Labs

AI is weaving itself into every corner of society: elections, financial systems, healthcare, national security, education, and the technologies families use every day. When the stakes are this high, existential risk becomes a public concern, not just an academic question. If we mishandle this transition, the consequences will not be evenly distributed, and they won’t be easy to reverse. The conversation matters because the impact reaches far beyond the tech world.

A Path Toward Responsible Progress

Addressing existential risk doesn’t mean halting innovation. It means building real guardrails. That includes safety evaluations before deployment, not after harm occurs; transparency from frontier labs; global governance that keeps pace with capability; and alignment research funded at the same level as AI development itself. The future can be extraordinary, but only if we treat safety as a foundational requirement, not an afterthought.

The Responsibility of Our Moment

Talking about existential risk doesn’t make someone pessimistic. It makes them accountable. Every major safety movement, from environmental protection to public health to transportation, started with voices willing to say, “If we ignore this, we may not get a second chance.” With AI, the stakes simply happen to be much larger. Those of us who think about the world our children will inherit cannot afford to dismiss the conversation because it feels uncomfortable. The future isn’t something we wait for; it’s something we shape.

Existential risk isn’t about predicting catastrophe. It’s about preparing wisely so that humanity remains in control of its own story. And the earlier we take that responsibility seriously, the better chance we have of building a future worthy of the generations coming behind us.

Explore the Existential Risk Playground

If you’re curious to see how AI misalignment and safety concepts play out in real life, I’ve built a free hands-on learning resource called the Existential Risk Playground, a set of tiny, beginner-friendly Python demos that show how AI systems fail and how we build the guardrails that keep them safe. You can explore the full toolkit here and dive deeper into the principles behind responsible AI: https://github.com/CybersecurityMom/existential-risk-playground

Leave a comment