Inspired by Nick Bostrom’s “The Fable of the Dragon-Tyrant”

Read it here → https://nickbostrom.com/papers/the-fable-of-the-dragon-tyrant/

Let me start by telling on myself:

I’m not a big sci-fi fan.

Never have been.

The closest I got was watching the old Godzilla movies with my dad, the grainy ones where Godzilla looked like he needed a nap and a chiropractor.

But this dragon story?

This one grabbed me.

And it has everything to do with AI safety and the world we’re building right now.

🐲 The Dragon That Everyone Just Accepted

In Bostrom’s fable, there’s a massive, terrifying dragon that demands thousands of people as tribute every day. The whole world reshapes itself just to feed it. Entire industries exist to make the sacrifice process efficient.

And after a while, people stop fighting the dragon.

They stop questioning it.

They just shrug and say:

“Well, that’s life.”

(Hint: the dragon represents aging and death, but the metaphor is bigger than that.)

Then one day, a group of scientists decide:

“What if we don’t accept this? What if we try to slay the dragon?”

After years of work, they build the tool to do it.

They fire the shot.

The dragon falls.

And suddenly the whole kingdom realizes:

“We could’ve done this so much sooner.”

🤖 So… What Does This Have to Do With AI?

Everything.

Because in our world, the dragons are different:

- uncontrolled AI systems

- privacy erosion

- digital manipulation

- online harms

- misinformation

- biased algorithms

And like the people in the fable, we sometimes shrug and say:

“Well, that’s just how the tech world works.”

But it doesn’t have to be.

AI safety is the act of deciding we’re not bowing to the dragon.

We’re not feeding it.

We’re not building railroads to help it consume us faster.

We’re building tools (technical, ethical, and procedural) to keep AI systems aligned with human well-being.

And unlike the fable, we don’t have to wait generations to act.

Beginner-Friendly Breakdown (No Sci-Fi Degree Required)

1. The dragon = a huge problem we treat as inevitable.

In the fable, it’s death.

In our world? Harmful or unsafe AI.

Privacy loss. Deepfakes. Manipulation.

All avoidable if we take it seriously early.

2. The scientists = the AI safety community.

Regular people who say,

“We don’t have to accept this; we can fix it.”

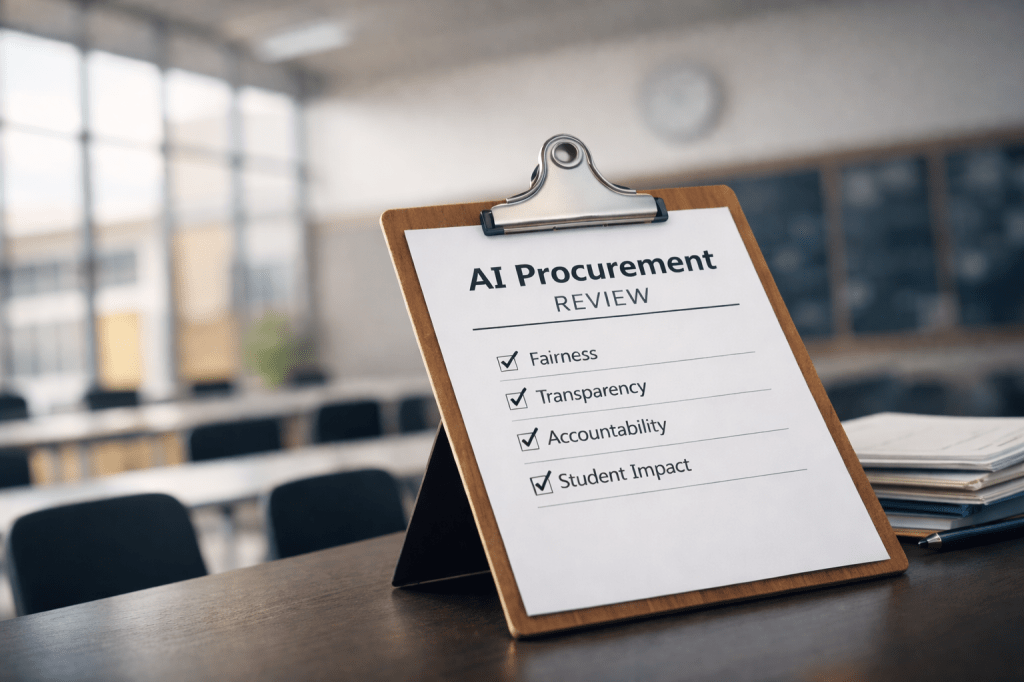

3. The weapon = safe, transparent, responsible AI design.

Not hype.

Not magic.

Just real engineering, governance, monitoring, and accountability.

4. The victory = a future where technology works for us, not over us.

Anyone, and I mean anyone, can grasp this.

This isn’t about math or coding.

It’s about paying attention to things before they become too big, too fast, and too dangerous to control.

We Don’t Need to Love Sci-Fi to Learn From It

I may not be deep in the sci-fi world, but I am a lifelong learner.

And this fable reminded me of something simple:

Sometimes the biggest danger isn’t the dragon.

It’s the moment we stop questioning the dragon.

AI isn’t here to destroy us, but it could go sideways if we treat it like a force of nature instead of a tool we control.

So if you want a story that’s wild, meaningful, and surprisingly funny when you imagine the dragon with Godzilla energy, go read Nick Bostrom’s “The Fable of the Dragon-Tyrant.”
