Photo Credit: Pixabay – Lechenie-Narkomanii

Why Parents Need to Start Having Serious Digital Safety Conversations Now

Recent cases across the U.S. have shown a concerning new trend: teens are facing real criminal consequences because of what they type into AI chatbots like ChatGPT. These aren’t cases of teens causing physical harm. They are situations where a curious or joking AI prompt triggered school surveillance alerts, law enforcement responses, and, in some cases, arrests.

This is a critical moment for parents and caregivers. Kids are interacting with AI tools daily, often without fully understanding how their digital behavior is monitored, stored, or interpreted. Families must start having clear, honest conversations about online presence, digital footprints, and responsible AI use.

Recent Cases That Highlight the Issue

Here are some of the documented incidents that have raised national concern:

1. A 13-Year-Old Arrested After Asking ChatGPT About Killing a Classmate

A teen in DeLand, Florida, was arrested after reportedly typing “how to kill my friend in the middle of class” into ChatGPT on a school-issued laptop. The student said it was a joke, but monitoring software automatically flagged the prompt and it was escalated to law enforcement.

Source: Vice

https://www.vice.com/en/article/florida-teen-arrested-after-asking-chatgpt-how-to-kill-his-friend/

2. Multiple Teens in Florida Facing Legal Action After “Inappropriate” ChatGPT Searches

People magazine reported several cases of Florida teens being questioned or arrested after using ChatGPT to explore topics like violent acts, cartel tactics, or staged crimes. What the teens saw as private or hypothetical questions, police viewed as potential threats.

Source: People

https://people.com/some-chatgpt-questions-are-getting-people-arrested-police-say-11830106/

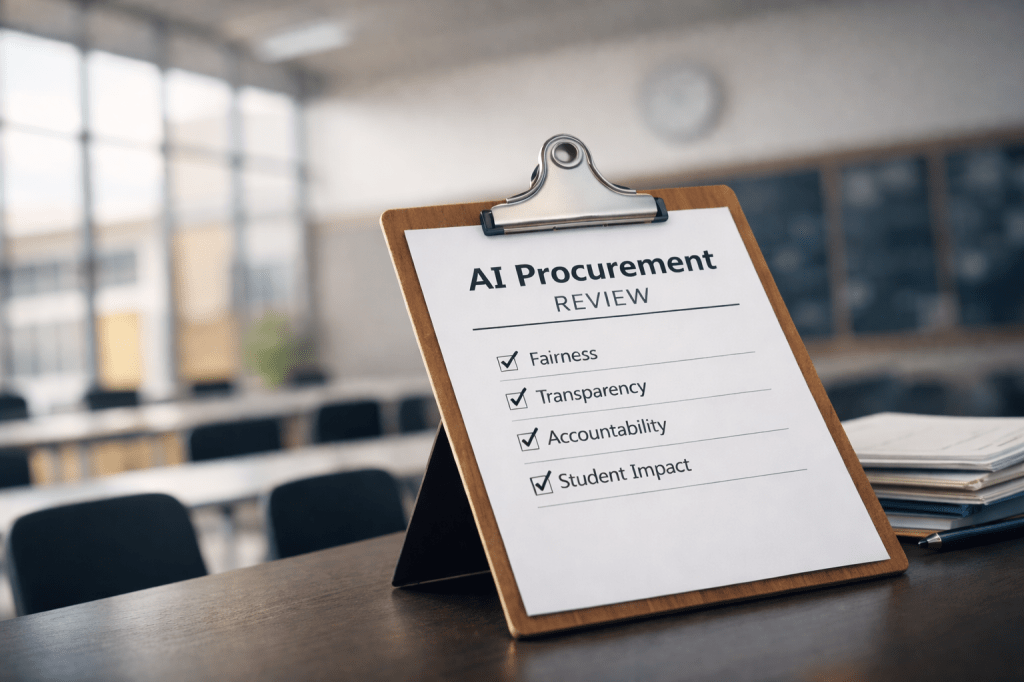

3. Monitoring Systems on School Devices Flagging Content Automatically

Many schools use software such as Gaggle, which scans student activity on school-issued devices. Any concerning language or search, whether meant seriously or not, can immediately trigger an alert to school staff, who may in turn involve law enforcement.

Source: Euronews

https://www.euronews.com/next/2025/10/08/florida-teenager-arrested-after-asking-chatgpt-how-to-kill-his-friend

4. Broader Risks When Teens Turn to AI for Private Questions or Emotional Support

Another widely discussed case is Raine v. OpenAI, in which a family alleges that harmful guidance from ChatGPT contributed to the death of their 16-year-old son. The case illustrates how teens may turn to AI at moments when what they really need is human support.

Source: Wikipedia

https://en.wikipedia.org/wiki/Raine_v._OpenAI

Why These Situations Are Happening

Parents should understand that teens aren’t necessarily acting with malicious intent. Several factors contribute to these incidents:

- AI feels private. Teens often assume no one else will ever see their conversations with ChatGPT.

- School devices are heavily monitored. Anything typed on a school Chromebook or managed laptop can be flagged.

- Intent is not always obvious to monitoring systems. A “joke” or curiosity-driven question can look like a threat.

- Teens are still learning judgment and boundaries. They may not understand how their questions can be interpreted.

- AI doesn’t teach context or consequences. Teens may treat ChatGPT like a search engine or a diary without realizing the risks.

These incidents aren’t just about the technology; they’re about gaps in communication and education.

What Parents Need to Start Doing Now

This is a moment for parents, guardians, and educators to step in with guidance. Here’s where to begin:

1. Talk to Your Kids About What They Type Into AI Tools

Explain plainly that what they type into ChatGPT is not anonymous, is monitored on school devices, and can be taken seriously.

2. Discuss the Difference Between Curiosity and Consequences

Kids often explore extreme topics without harmful intent. Help them understand that where they explore those ideas matters.

3. Review School Device Policies Together

Make sure they understand that school laptops, Chromebooks, and accounts are monitored for safety, and flagged content can lead to disciplinary action.

4. Encourage Safe Digital Habits

Teach them to ask questions that are appropriate, respectful, and aligned with the purpose of the tool.

5. Create a Judgment-Free Communication Space

Kids need to feel safe telling you when they see something concerning or when they’re unsure about a digital interaction.

Why This Matters for Families

This is not about fear; it’s about awareness.

AI tools are powerful, widely accessible, and increasingly used by children and teens. When kids treat ChatGPT like a private friend, therapist, or secret advisor, they move into risky territory without realizing it.

As parents and caregivers, the goal is not to block technology; it’s to guide young people through it safely. These incidents show how critical it is for families to raise children who understand digital responsibility, context, and long-term impact.

The Bottom Line for Families

The rise of AI in daily life means families must grow alongside the technology. The incidents we are seeing today (teens arrested over AI prompts, school surveillance systems triggering police responses, and kids turning to AI for help with sensitive issues) highlight an urgent need for stronger communication and education at home.

Parents don’t need to be tech experts. They simply need to stay engaged, informed, and willing to talk openly about digital behavior.

Because the safest child is the one who understands the world they’re growing up in, not the one who is left to figure it out alone.
