Photo Credit: Pixabay

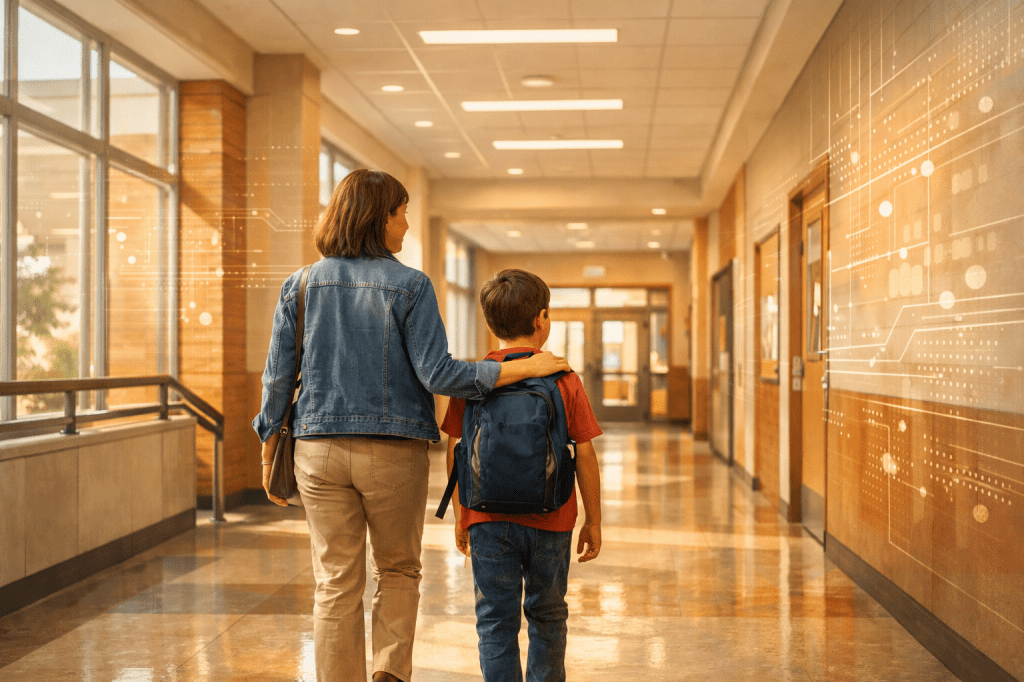

The top three things parents, guardians, and educators bring up with me when we talk about the Internet are human trafficking (and the fear that their children could be pulled in), cyberbullying, and too much screen time. And here's what breaks my heart: when I scroll online and look at cyberbullying, sometimes I can't tell where the kids end and the adults begin. The same insults. The same pile-ons. The same casual cruelty.

Adults should be the role models here. Instead, too many are showing kids that “logging in and tearing someone down” is the new form of entertainment.

Cyberbullying Has No Age Limit

Cyberbullying isn’t just a middle-school problem; it’s a cultural one. Kids learn by watching us. If they see adults throwing jabs, mocking strangers, or “trolling for fun,” they take it as a green light.

And the consequences are devastating:

- Studies show 40–50% of cyberbullying victims know their bully personally, yet many suffer in silence (PMC).

- The effects aren't small. They're life-changing: depression, anxiety, self-harm, slipping grades, even suicidal thoughts.

If we want kids to treat others with respect online, we have to model it first.

The Algorithm Problem

Let’s be honest: people bully. But platforms profit from it. I once saw an influencer explain to his followers that he had to post “controversial stuff” or they wouldn’t even see his content. I thought he was exaggerating until I started digging. Turns out, he was right.

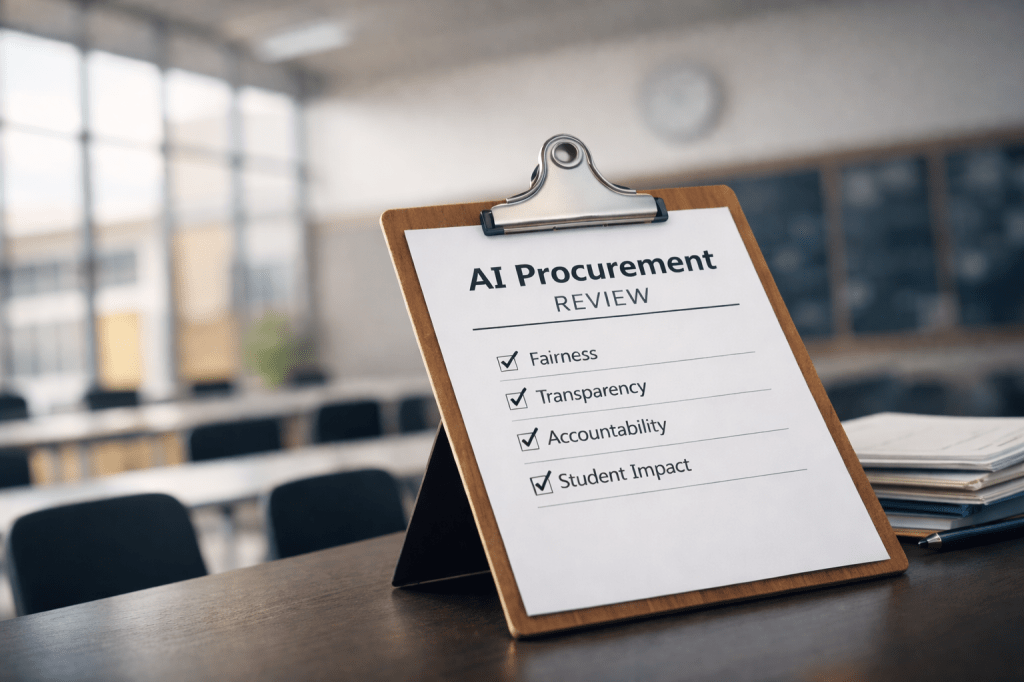

- Researchers at Northwestern University found that social media algorithms prioritize emotionally charged and controversial content because anger and outrage keep us glued to the screen (Northwestern University).

- A UCL study showed TikTok feeds shifting from neutral content to harmful, misogynistic videos in less than five days (UCL).

- The Guardian ran an experiment with blank Instagram and Facebook accounts, and guess what? The algorithms still pushed sexist, divisive content even without any user input (The Guardian).

- And The Washington Post reported that Instagram's algorithms often surface violent or disturbing content, even to minors, because "sensational posts" drive clicks (The Washington Post).

It’s no accident. Negativity sells. Outrage spreads faster than kindness. Even “angry” emojis count as engagement. And every click, comment, and share makes someone money.

But Here’s the Hard Truth

We can blame algorithms all we want, but at the end of the day, it’s people who choose to post cruelty. And kids are watching us.

If they see us argue with strangers, mock someone’s photo, or jump into a fight for sport, what do we think they’ll do?

What We Can Do Better

- Hold platforms accountable. Push for transparency. Lawmakers are already debating how to update laws like Section 230 of the Communications Decency Act to limit algorithm abuse (Time).

- Teach kids a "digital diet." Not all online content is healthy. UCL researchers compare some feeds to "ultra-processed food" for the brain (UCL). We need to help kids learn what to consume and what to scroll past.

- Model respect. Kids copy what we do. Show them that you can disagree without tearing people apart.

- Pause before posting. Teach children (and remind ourselves) to ask: What’s this making me feel? Do I need to react, or is silence the stronger choice?

A Mirror We Can Rewrite

Right now, the online world is a broken mirror, reflecting our worst impulses back at us. But children are looking into that mirror, too, and what they see becomes who they think they should be.

We have the power to change that reflection. By leading with kindness, by demanding better from platforms, and by refusing to normalize cruelty, we can show kids that empathy is not weakness; it's strength.

The algorithms may be stacked against us, but our example is still the most powerful algorithm of all.

Sources & Further Reading:

- Studies show 40–50% of cyberbullying victims know their bully personally, yet many suffer in silence – PMC

- Lawmakers are already debating how to update policies like Section 230 to limit algorithm abuse – Time

- We unleashed Facebook and Instagram’s algorithms on blank accounts. They served up sexism and misogyny – The Guardian

- Dark and strange content is slipping through social media’s cracks – The Washington Post

- Social media algorithms exploit how humans learn from peers – Northwestern University

- Social media algorithms amplify misogynistic content for teens – UCL
